While we're still quite some time away from a “true” internet of things, i.e. a globally interconnected system of systems interacting with each other at an almost cognitive level, the IoT revolution will not arrive as some sudden unveiling at MWC. Rather, like similar technology “leaps”, it will be a gradual evolution of the networks and services we enjoy today. Those following the development of 5G will recognize the discussion: will it be an improvement of something existing or something brand new? Shades of grey, I say.

…and the development of the IoT vision is so tightly coupled with 5G I'm not sure we can distinguish one from the other at certain points.

The principal idea of IoT is, as we know, nothing new but as we move into 2016 there is a clear difference in the tone/pitch/intensity of the topic. Those of you subscribing to telecoms newsletters, e.g. Fierce or RCR, will perhaps have noticed almost every issue touches on IoT nowadays. A completely subjective conclusion, shot from the hip, but may I venture a guess that you agree?

I'd like to zero in on our point of view when it comes to any new wireless technology, namely quality of experience, and try to define it in the context of IoT. While IoT will spell gold for those who manage to tune into the disruptive business opportunities it can provide, our question becomes one of ensuring that this gold is of the highest carat, i.e. maximizing quality of experience and operational efficiency for these IoT entrepreneurs.

Assuring the quality of IoT services

So how about we extrapolate our experiences so far and see what it might tell us about assuring quality of IoT services?

No hardware, please!

If there is anything the deployment of IMS & VoLTE has shown us, it's that any IoT test and monitoring approach needs to be more parts software than hardware for it to scale. While using actual sensors, gateways, and user devices, whether embedded or consumer products, is essential to capture the true user experience, testing and monitoring of the majority of nodes/interfaces/protocols/services needs to be completely virtualized. Many of the benefits will be the same as those we are seeing today. Good thing the industry as a whole already seems to agree that NFV/SDN is essential, so the mindset is already here.

Compounded complexity

With IoT, not only will new protocols, operating systems and nodes rise in relevance, but we will also see a mix of these being used across the end-to-end service chain. Hence testing end-to-end will be paramount.

Monitoring a single network node will not give you the complete picture… and the self-healing nature of today's networks will not help with troubleshooting. Intermittent problems that are here today and gone tomorrow… only to resurface at some later point? Surely.

Automate, automate, automate

Just adding a single protocol, IMS, gave us millions and millions of possible call flows to test as we migrate to packet-based voice services. Continuously and proactively hunting for issues that will impact SLAs, 24x7x365, needs to be more or less automated. Experts will be in short supply and their time has to be well spent. Collecting data must follow a paradigm of speed and simplicity.

A well-tuned air interface

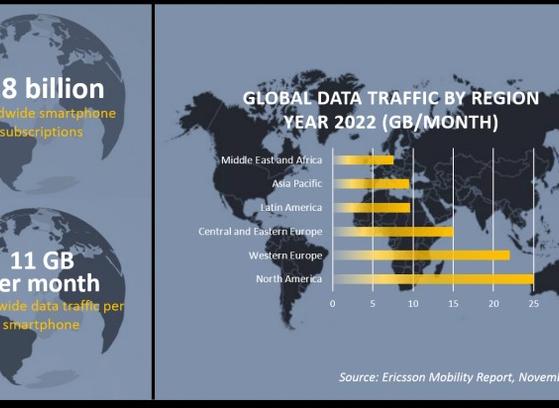

The latest Ericsson Mobility Report has one page dedicated to IoT (will we see an expansion next year?), which indicates that in only 5 years the majority of connected M2M devices will be non-3GPP (i.e. non-cellular) devices. Anyone care to speculate how many of these will use a cellular network as a backbone? Holler if you have any data on this, but my guess would be: a lot.

A common scenario in home security/smart home solutions seen today is the concept of capillary networks, where the local network of sensors etc. is connected via proprietary technologies to a gateway of some type, which in turn uses either WiFi or cellular as its internet backhaul. With cellular comes the convenience of AAA, but how essential will Soft SIMs become for the IoT cellular application? The idea of capillary networks also fits conveniently with the concept of edge computing.

Old news really

The above thoughts are admittedly speculative and generic but we're about to get more specific in the coming weeks. Until then, I leave you with some snapshots of M2M at work, with remote gas meters connected to TEMS Investigation, used to test & measure the perspective of the gas meter when installed on site.
